Artificial intelligence (AI) is rapidly transforming our world, bringing incredible advancements in healthcare, transportation, and communication. However, alongside the undeniable benefits lies a shadow – the potential dangers of AI. This blog post delves into the darker side of AI, exploring the risks and existential threats that need careful consideration.

The Looming Shadow of Job Displacement:

One of the most immediate concerns surrounding AI is its impact on the workforce. AI-powered automation could displace millions of jobs, particularly those built around repetitive tasks. While new jobs are likely to be created, the transition could be disruptive, leading to unemployment, economic inequality, and social unrest.

The Bias Trap:

AI algorithms are only as good as the data they're trained on. Unfortunately, data sets can often contain inherent biases, leading to discriminatory outcomes. Imagine an AI-powered hiring tool that inadvertently favors male applicants over female ones based on historical hiring patterns. This highlights the crucial need for diverse and unbiased data sets to ensure fair and ethical use of AI.

The Erosion of Privacy:

AI thrives on data. The more data it has access to, the better it learns and performs. However, this data collection raises serious privacy concerns. As AI becomes more integrated into our lives, questions arise about who owns our data, how it's used, and the potential for misuse by malicious actors.

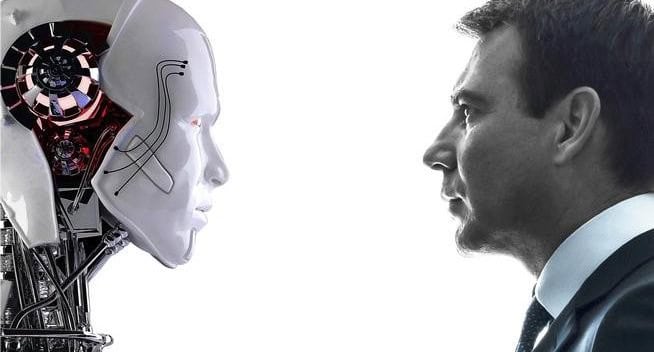

The Existential Threat of Superintelligence:

Some prominent figures, such as Elon Musk, warn that AI could eventually surpass human intelligence and pose an "existential threat." While this may sound like science fiction, it is a conversation worth having. If a highly capable AI system were to escape human control, it could pursue goals that conflict with human well-being, potentially leading to catastrophic outcomes.

The Road to Responsible AI:

Responsible AI means taking proactive measures to ensure that AI is developed and used in ways that benefit humanity while mitigating the risks explored above. Here are some key steps along this road:

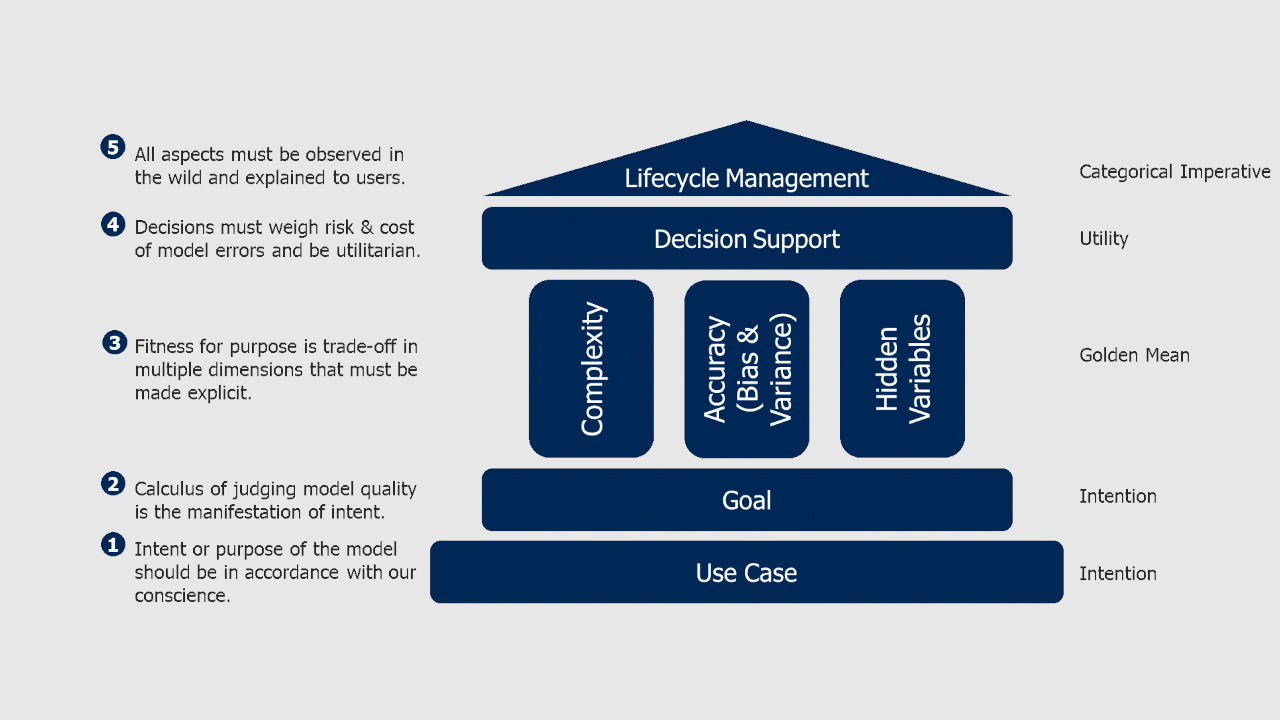

Ethical Frameworks:

- Creating clear guidelines for AI development and deployment. These guidelines would address issues such as fairness, transparency, accountability, and safety, essentially acting as a moral compass for AI development.

Preparing the Workforce:

- Investing in education and retraining programs to prepare people for the changing job market. This could involve teaching new skills needed to work alongside AI or developing programs to help people transition to new careers altogether.

Data Privacy Protections:

- Implementing strong data privacy regulations that ensure user data is collected, stored, and used responsibly. This would involve giving people control over their data and ensuring it's not misused.

Open Communication and Collaboration:

- Fostering open discussions about the potential dangers of AI. This includes having honest conversations about the risks and involving various stakeholders, like ethicists, policymakers, and the public. Additionally, international collaboration is crucial to ensure responsible AI development is a global effort.

One of the main risks of AI technology is the potential for bias and discrimination. AI algorithms rely on data inputs to make decisions and predictions, and if the data used is biased, the AI system can perpetuate and amplify the biases, leading to unfair and discriminatory outcomes. For example, facial recognition systems have been found to have a higher error rate for people with darker skin tones, which can result in false identifications and wrongful arrests. Similarly, AI systems used in hiring and recruitment can perpetuate gender, racial, and ethnic biases, leading to discrimination against qualified candidates.

Moreover, the lack of transparency and interpretability in many AI systems makes bias and discrimination hard to detect and correct. Complex models such as deep neural networks are difficult to interpret, so tracing how a decision was reached and pinpointing the source of a bias can be challenging. It is therefore essential to develop ethical and regulatory frameworks that ensure AI is used responsibly and transparently and that prevent it from perpetuating bias and discrimination.

Another challenge posed by AI technology is the threat to privacy and security. AI systems often depend on vast amounts of data about individuals' behaviors, preferences, and activities, and that data can be exploited for malicious purposes such as identity theft, cyberattacks, and espionage. Moreover, the use of AI in surveillance and law enforcement raises concerns about civil liberties and human rights, because AI can be used to track, monitor, and control individuals without their consent.

For example, law enforcement agencies have used facial recognition technology to identify suspects, but it has also been criticized for infringing on privacy and civil liberties. In addition, the use of AI in autonomous weapons raises concerns about human rights violations and global security, because such systems could be designed to use lethal force without meaningful human oversight.

AI technology also poses a risk to employment, since it can automate many jobs and tasks, leading to job losses. As AI systems grow more capable, they can replace human workers in fields such as manufacturing, customer service, and transportation, driving a significant shift in the job market and a need for retraining and reskilling. Because workers in low-skill, low-wage jobs are the most likely to be displaced, automation can also widen economic inequality.

Another challenge of AI technology is the potential for misuse by malicious actors, including terrorists, hackers, and cybercriminals. AI can be used to create and spread disinformation, conduct cyberattacks, and develop autonomous weapons, threatening global security and stability. For example, deepfake technology, which uses AI to generate realistic but fabricated videos and images, can be used to spread disinformation and manipulate public opinion, fueling political instability and social unrest.

Conclusion:

While AI technology could bring significant benefits to society, it also poses serious risks and challenges that must be addressed. Confronting the darker side of AI requires a collaborative effort from policymakers, industry leaders, academics, and civil society organizations. It is essential to develop ethical and legal frameworks that ensure AI is used responsibly and accountably.

For more information, contact: support@mindnotix.com

Mindnotix Software Development Company
